Streaming

A Route Handler (route.js) can stream its response: it sends parts of the result to the client while the rest is still being generated, instead of waiting for the whole result to be complete.

To simulate streaming, the periodic output of an iterator can be passed to a ReadableStream, which the Route Handler then returns as its response body.
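The approach above can be sketched as follows. This is a minimal illustration, not a definitive implementation: it assumes a runtime where `ReadableStream`, `TextEncoder` and `Response` are globals (Node 18+ or the Edge runtime), and the helper names `iteratorToStream`, `makeIterator` and `sleep` are hypothetical.

```ts
// Hypothetical helper: wraps an async iterator in a ReadableStream.
// Each pull() asks the iterator for its next chunk and enqueues it;
// the stream is closed once the iterator reports it is done.
function iteratorToStream(
  iterator: AsyncIterator<Uint8Array>,
): ReadableStream<Uint8Array> {
  return new ReadableStream<Uint8Array>({
    async pull(controller) {
      const { value, done } = await iterator.next()
      if (done) {
        controller.close()
      } else {
        controller.enqueue(value)
      }
    },
  })
}

// Hypothetical helper: resolves after `ms` milliseconds.
function sleep(ms: number): Promise<void> {
  return new Promise((resolve) => setTimeout(resolve, ms))
}

const encoder = new TextEncoder()

// Hypothetical generator that produces output periodically,
// standing in for a slow data source.
async function* makeIterator(): AsyncGenerator<Uint8Array> {
  yield encoder.encode('<p>One</p>')
  await sleep(200)
  yield encoder.encode('<p>Two</p>')
  await sleep(200)
  yield encoder.encode('<p>Three</p>')
}

// Route Handler: each chunk is sent to the client as soon as it is yielded.
export async function GET(): Promise<Response> {
  const stream = iteratorToStream(makeIterator())
  return new Response(stream, {
    headers: { 'Content-Type': 'text/html; charset=utf-8' },
  })
}
```

Using `pull` rather than pushing every chunk from `start` means the iterator is only advanced when the consumer asks for more data, so a slow client naturally applies backpressure.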

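Streaming is commonly used with Large Language Models (LLMs), such as those from OpenAI, so that AI-generated content can be shown to the user as it is produced. For example, the following Route Handler streams a chat completion from OpenAI: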

route.tsx:

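```ts
import OpenAI from 'openai'
// OpenAIStream and StreamingTextResponse come from earlier releases of the
// `ai` package (Vercel AI SDK); later major versions removed them.
import { OpenAIStream, StreamingTextResponse } from 'ai'

// The API key is read from an environment variable.
const openai = new OpenAI({
  apiKey: process.env.OPENAI_API_KEY,
})

// Run this Route Handler on the Edge runtime.
export const runtime = 'edge'

export async function POST(req: Request) {
  // Chat history sent by the client.
  const { messages } = await req.json()

  // Request a streamed chat completion instead of waiting for the full reply.
  const response = await openai.chat.completions.create({
    model: 'gpt-3.5-turbo',
    stream: true,
    messages,
  })

  // Convert the OpenAI response into a text stream and return it,
  // so tokens reach the client as soon as they are generated.
  const stream = OpenAIStream(response)
  return new StreamingTextResponse(stream)
}
```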
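On the client, a streamed body like the one returned by the Route Handler above can be read incrementally rather than awaited as a whole. The following is a minimal sketch, assuming a browser or Node 18+ environment with the standard Fetch and Streams APIs; `readStream` is a hypothetical helper name, not part of Next.js or the `ai` package.

```ts
// Hypothetical helper: reads a streamed Response chunk by chunk,
// calling onChunk with each decoded piece of text as it arrives,
// and resolves with the full text once the stream ends.
async function readStream(
  response: Response,
  onChunk: (text: string) => void,
): Promise<string> {
  if (!response.body) throw new Error('Response has no body')
  const reader = response.body.getReader()
  const decoder = new TextDecoder()
  let full = ''
  for (;;) {
    const { value, done } = await reader.read()
    if (done) break
    // { stream: true } keeps multi-byte characters that are split
    // across chunk boundaries from being decoded incorrectly.
    const text = decoder.decode(value, { stream: true })
    full += text
    onChunk(text)
  }
  // Flush any bytes still buffered in the decoder.
  full += decoder.decode()
  return full
}
```

In a client component this could be called as `readStream(await fetch('/api/chat', { method: 'POST', body: JSON.stringify({ messages }) }), appendToUi)`, where the `/api/chat` path and the `appendToUi` callback are hypothetical.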

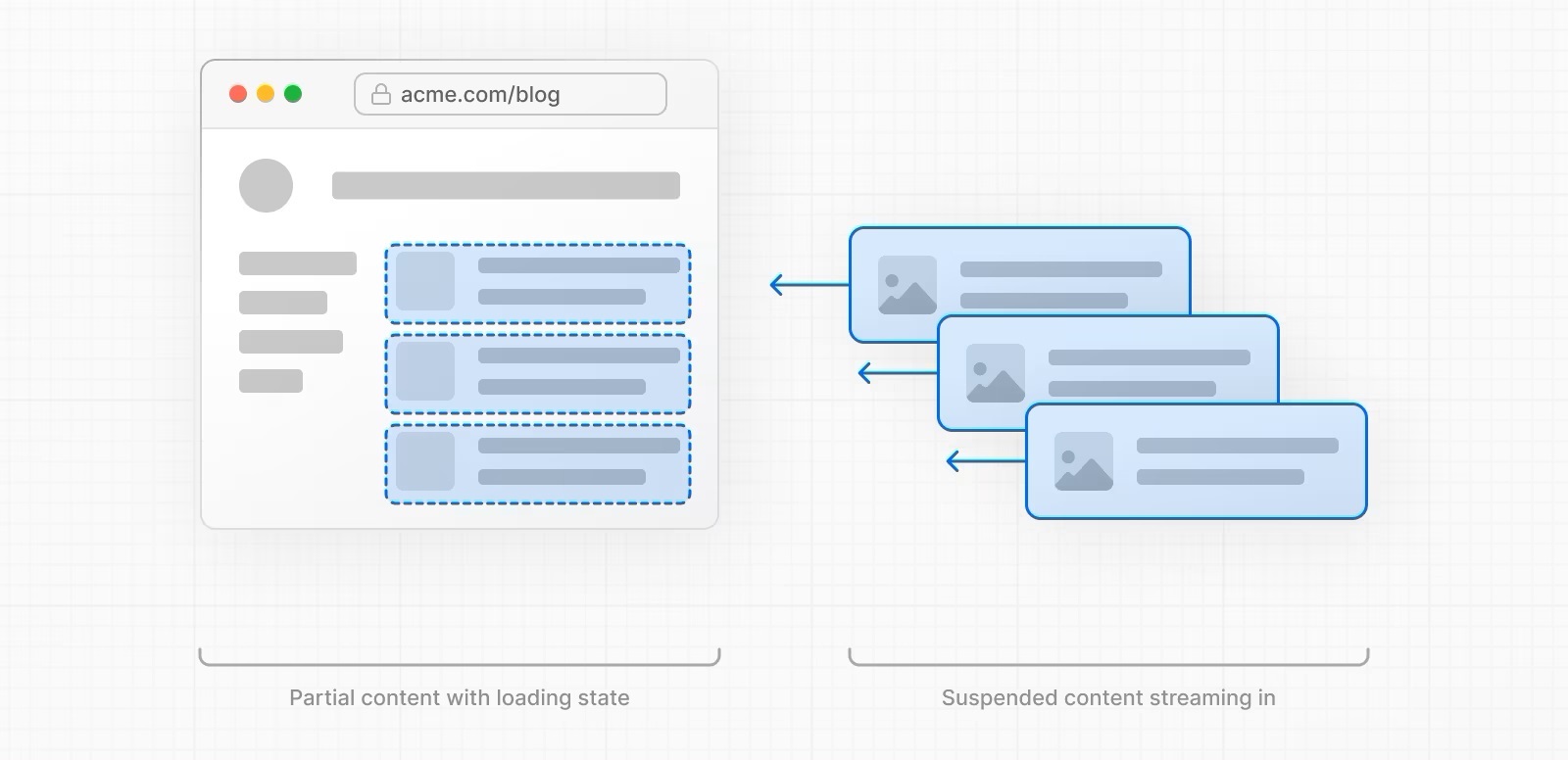

In the UI, streaming with `<Suspense>` progressively sends rendered HTML from the server to the client, and React prioritizes which components to hydrate and make interactive first based on user interaction.